The proliferation of generative AI, including models like ChatGPT, has taken the world by storm. The technology has become a hot topic of conversation both in tech circles and among the general public, and there is an undeniable sense of excitement about its new capabilities. That excitement is evident in the flood of predominantly positive articles and books about AI on websites and in bookstores. Amid this techno-optimistic wave, companies are scrambling to incorporate AI into their products, often keen to ride the hype train to keep up with competitors and meet growing consumer expectations.

However, it is crucial to temper our enthusiasm with caution. While it is easy to get swept up in the tide of AI's transformative potential, we must also address its possible downsides. Like any powerful force, AI has a “dark side”: a potential for misuse and unintended consequences that could be as large as, if not larger than, the benefits it brings.

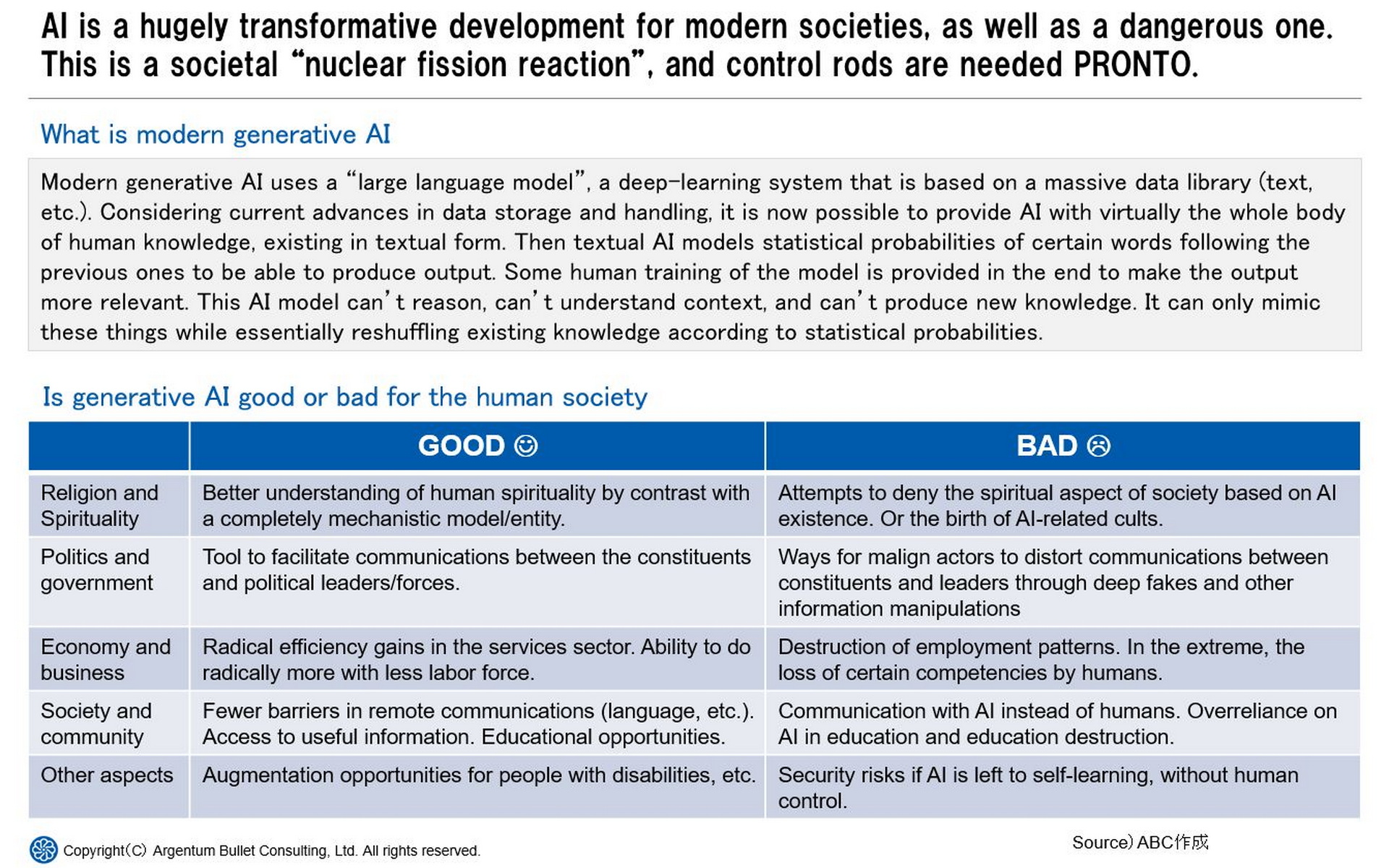

First of all, what is current generative AI? Modern text-based generative AI is built on a “large language model” (LLM), a deep-learning system trained on a massive collection of data, mostly text. Given current advances in data storage and processing, such models can now be trained on a very large share of the human knowledge that exists in textual form. To produce output, the model calculates the statistical probability of each possible next word given the words that precede it, and then picks a likely continuation. Humans then train the model further to some extent, for example by rating its answers, to make the output more relevant.
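To make the idea of “predicting the next word from statistical probabilities” concrete, here is a deliberately tiny sketch. It is an illustration under a loud simplification, not how production LLMs work: it swaps the neural network for a simple bigram table that only counts which word follows which, and the function names (`train_bigram`, `next_word_probs`, `generate`) and the sample corpus are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Toy 'training': count how often each word follows each other word.
    (Simplification: real LLMs learn a neural network over tokens instead.)"""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Convert raw follow-counts into probabilities for the word after `prev`."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(counts, start, length, seed=0):
    """Repeatedly sample the next word in proportion to its probability."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        probs = next_word_probs(counts, out[-1])
        if not probs:
            break  # no word was ever seen after this one, so stop
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)


# Hypothetical miniature "corpus"; a real model sees vastly more text.
model = train_bigram("the cat sat on the mat the cat ate the fish")
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(generate(model, "the", 5))
```

Even this toy shows the article's point: the generator can only recombine word sequences it has already seen, weighted by how often they occurred. Real models condition on thousands of preceding tokens rather than a single word, which makes their output far more fluent, but the output is still produced by choosing probable continuations.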

This AI model cannot reason, cannot understand context, cannot produce new knowledge, and cannot exercise moral judgement. It can only mimic these things, essentially reshuffling existing knowledge according to statistical probabilities. Any flaw in this “reshuffling” can therefore produce harmful results, such as falsehoods stated with complete confidence.

In the interest of a balanced discussion, let us consider both the good and the bad that generative AI models can bring to modern society. We examine their possible influence in five areas: religion and spirituality, politics and government, economy and business, society and community, and other aspects.

On the positive side, AI holds immense potential to revolutionize numerous sectors. It can automate tedious tasks, augment human capabilities, and enable us to make more informed decisions. By learning patterns in data faster than any human could, AI can contribute to advancements in fields ranging from healthcare to science, from education to entertainment.

However, the prospect of these benefits should not blind us to the risks of this powerful technology. Concerns about AI include the further dehumanization of society, job automation leading to unemployment, bias in AI decision-making, invasion of privacy, and AI-generated deepfakes that can manipulate public opinion or enable fraud. In education, generative AI models could undermine the very process of learning if we do not provide proper safeguards.

Moreover, bad actors can use AI maliciously. Like any tool, it can cause serious harm if it falls into the wrong hands. Even without malicious intent, errors in the models can produce unintended results.

To mitigate these risks, it is essential that AI development includes a robust framework for ethical considerations, regulatory oversight, and public transparency. It is our collective responsibility to ensure that the growth of AI is guided by principles that prioritize public welfare and justice.

As we push the boundaries of this technology, we must encourage open discussion of its potential negative consequences. Such discussion will foster a better understanding of AI's implications, inform policy and regulation, and guide us toward a future where AI is used responsibly and ethically. It is not just about moving forward with AI; it is about moving forward wisely.